Ever had that frustrating, “But I thought it was done!” conversation? You’re not alone. In the fast-paced world of project delivery, the word ‘done’ can be dangerously ambiguous. A weak or nonexistent Definition of Done (DoD) leads to rework, missed deadlines, and creeping technical debt. It’s the silent killer of productivity.

But what if you could eliminate this ambiguity for good? A well-crafted DoD isn’t just another checklist; it’s a shared understanding of quality and completeness that aligns your entire team. It’s the bedrock of predictable delivery, and a critical part of any software project workflow that is expected to produce consistent, high-quality output.

This guide moves beyond generic advice to provide actionable templates and strategic insights. We’ll explore six battle-tested Definition of Done examples you can steal and adapt today. We’ll also break down how to tailor these examples for different contexts, from hardcore engineering to user-focused design, ensuring every piece of work that gets the ‘done’ stamp is truly, unequivocally, finished. Get ready to transform your team’s process and finally agree on what ‘done’ really means.

1. Technical Quality Checklist DoD

Ah, the Technical Quality Checklist DoD. This is the “eat your vegetables” of the Agile world, the responsible adult in a room full of creative chaos. It’s a no-nonsense, comprehensive checklist that ensures your code isn’t just functional, but also robust, secure, and ready for the big leagues. This Definition of Done example focuses purely on the nitty-gritty technical standards, making sure every user story passes a rigorous engineering inspection before it can be called “done.”

Think of it as the ultimate quality gatekeeper. Before a feature can even dream of seeing a user, it must prove its worth against a gauntlet of technical criteria. This includes everything from code reviews and automated testing to performance benchmarks and security scans. This isn’t just about making things work; it’s about making them work well.

Strategic Breakdown: The Why and How

So, why go full-on “checklist commando”? Because it systematically eradicates the “it works on my machine” plague. It introduces a shared standard of quality that prevents technical debt from piling up and turning your beautiful codebase into a digital house of horrors.

- Who uses it? Engineering powerhouses like Microsoft’s Azure DevOps teams and Google’s internal software engineering groups live and breathe by these checklists. Netflix, famous for its resilience, embeds strict performance and reliability criteria directly into its DoD, ensuring your binge-watch session is never interrupted by shoddy code.

- Why does it work? It shifts quality from an afterthought to a core part of the development process. By automating checks within a CI/CD pipeline, teams catch issues early, reduce manual review time, and build a culture of accountability. The clarity of a checklist removes ambiguity, ensuring every developer knows exactly what’s expected. For a deeper dive into structuring these workflows, explore these in-depth process mapping techniques.

Key Insight: The true power of this DoD isn’t just the list itself, but the team-wide agreement and automation behind it. It turns abstract concepts like “quality” and “security” into concrete, verifiable actions.

Actionable Takeaways for Your Team

Ready to forge your own technical DoD? Here’s how to get started without boiling the ocean.

- Start Small, Win Big: Don’t try to implement Google’s entire security protocol on day one. Begin with the essentials: mandatory peer code review, 80% unit test coverage, and a successful build in the CI pipeline.

- Automate Everything Possible: Manually checking every item is a recipe for burnout. Use your CI/CD tools to automate tests, static code analysis, and security vulnerability scans. Let the robots do the heavy lifting.

- Make it a Living Document: A DoD isn’t a “set it and forget it” artifact. Use your team retrospectives to review and refine the checklist. Did a bug slip through? Maybe a new criterion is needed. Is a check causing more friction than value? Consider removing it.
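To make the starter checklist above concrete, here is a minimal Python sketch of an automated gate that a CI job could run against each story. Everything named here (`StoryStatus`, `MIN_COVERAGE`, `unmet_criteria`, `is_done`) is hypothetical and invented for illustration; a real pipeline would pull these facts from its own review, coverage, and build tools.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StoryStatus:
    """Facts a CI pipeline could report for one user story (hypothetical fields)."""
    peer_review_approved: bool
    unit_test_coverage: float  # percentage, 0-100
    ci_build_passed: bool


# Assumed threshold, mirroring the "80% unit test coverage" starting point.
MIN_COVERAGE = 80.0


def unmet_criteria(story: StoryStatus) -> List[str]:
    """Return the DoD items the story still fails; an empty list means done."""
    failures = []
    if not story.peer_review_approved:
        failures.append("peer code review not approved")
    if story.unit_test_coverage < MIN_COVERAGE:
        failures.append(
            f"unit test coverage {story.unit_test_coverage:.0f}% "
            f"is below {MIN_COVERAGE:.0f}%"
        )
    if not story.ci_build_passed:
        failures.append("CI build failed")
    return failures


def is_done(story: StoryStatus) -> bool:
    """A story is done only when no criterion is unmet."""
    return not unmet_criteria(story)
```

Returning the list of failures, rather than a bare yes or no, tells the developer exactly which box is still unchecked. When a retrospective adds or removes a criterion, the change is one more `if` block in one place.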

2. User Acceptance Focused DoD

Welcome to the User Acceptance Focused DoD, the “does anyone actually want this?” sanity check of the development lifecycle. This is where the rubber meets the road, or more accurately, where your shiny new feature meets the discerning eye of the end-user. This Definition of Done example moves beyond technical perfection and asks the most critical question: does this solution actually solve the user’s problem and meet their expectations?

This approach ensures that a user story isn’t considered “done” just because the code is clean. It’s only done when the intended user or a key business stakeholder has given it their seal of approval. It’s about closing the feedback loop and ensuring the final product isn’t just built right, but is also the right build.

Strategic Breakdown: The Why and How

Why put users in the driver’s seat? Because it’s the most effective way to prevent building beautiful, functional features that nobody uses. This DoD bridges the gap between the development team’s interpretation and the product stakeholder’s vision, ensuring alignment and delivering genuine business value.

- Who uses it? Product-led giants live by this. Spotify runs rigorous user acceptance testing for new features, ensuring playlists and recommendations hit the right note with listeners. Airbnb’s product teams won’t ship a feature without extensive user testing and accessibility compliance, making sure the booking experience is seamless for everyone. Salesforce also leans heavily on this, with comprehensive demo and approval processes involving product stakeholders to validate every new function.

- Why does it work? It makes user value a non-negotiable gate. By baking user validation directly into the sprint, teams avoid the dreaded end-of-project reveal where stakeholders see the product for the first time and say, “This isn’t what I asked for.” It cultivates empathy, sharpens the team’s focus on user needs, and requires a solid stakeholder communication plan to keep everyone in sync. To build a robust User Acceptance Focused DoD, review a range of user acceptance test examples for practical insights and templates.

Key Insight: This DoD transforms “done” from a technical milestone into a value-based achievement. It forces teams to continuously ask “who are we building this for?” and “does it work for them?”, which is the cornerstone of true product-market fit.

Actionable Takeaways for Your Team

Ready to put your users at the center of your “done” definition? Here’s a practical roadmap.

- Define “Accepted” Upfront: Don’t wait until the end of the sprint to figure out what success looks like. Work with your Product Owner to write crystal-clear, measurable acceptance criteria for every user story before any code is written.

- Schedule “Demo Day” Religiously: Make sprint demos a mandatory, recurring ritual. Invite the same key stakeholders every time to build consistency and ensure they are actively involved in signing off on work.

- Build Your Feedback Machine: Create simple, reusable templates for user testing scripts and feedback collection forms. Establish a panel of friendly users or internal stakeholders you can tap for quick feedback cycles, removing friction from the validation process.

3. Deployment-Ready DoD

Welcome to the Deployment-Ready DoD, the final boss of “done.” This isn’t just about code that works; it’s about code that thrives in the wild. This Definition of Done example is laser-focused on ensuring every feature is not just functionally correct but is also fully prepared for a smooth, uneventful journey into production, complete with parachutes like monitoring, rollback plans, and operational support.

Think of this as your pre-flight checklist before launching a rocket. It guarantees that when you push the big red button, you’re not just hoping for the best. You’ve anticipated turbulence, planned for emergencies, and have all your instruments ready to report back on the mission’s health. This DoD bridges the gap between development and operations, making deployment a boring, repeatable, and safe event.

Strategic Breakdown: The Why and How

Why adopt this level of pre-deployment paranoia? Because it’s the ultimate defense against the dreaded “3 AM on a Saturday” production fire. It transforms deployment from a high-stakes gamble into a predictable, low-stress procedure by making operational readiness a non-negotiable part of the development cycle.

- Who uses it? This is the bread and butter of Site Reliability Engineering (SRE) and DevOps cultures. Amazon’s retail platform lives by this, with extensive readiness checks including load testing and dependency validation. Meta (Facebook) bakes comprehensive monitoring and phased rollback procedures into its DoD for every feature, while Etsy’s famous continuous deployment pipeline relies heavily on detailed operational readiness criteria.

- Why does it work? It forces teams to think about a feature’s entire lifecycle, not just its creation. By making logging, monitoring, and alerts part of the acceptance criteria, you build observability into the system from the ground up. This proactive approach minimizes downtime, speeds up incident response, and builds immense trust between development and operations teams.

Key Insight: A Deployment-Ready DoD redefines “completion” to include operational stability. The feature isn’t done until it can be safely managed, monitored, and, if necessary, gracefully removed from production.

Actionable Takeaways for Your Team

Ready to make your deployments as exciting as watching paint dry (in a good way)? Here’s how to build your own Deployment-Ready DoD.

- Create a Rollback Runbook: For every feature, ask: “How do we turn this off?” Document the steps. This could be a feature flag, a database script, or a process to revert the deployment. Make this runbook a mandatory deliverable.

- Standardize Your Monitoring: Don’t reinvent the wheel every time. Create standardized monitoring dashboards and alert templates for new features or services. A new API endpoint should automatically get a template for tracking error rates, latency, and request volume.

- Practice in a Safe Space: Regularly conduct deployment drills in a staging or pre-production environment. Practice the full deployment and rollback procedure. This builds muscle memory and exposes flaws in your process before they impact real users.
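The three takeaways above can be sketched as a small readiness check. This is an illustrative Python sketch, not a real monitoring API: `monitoring_template`, `Feature`, `readiness_gaps`, and the threshold values in `DEFAULT_ALERTS` are all assumptions made up for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical default alert thresholds; real values depend on your service.
DEFAULT_ALERTS = {"error_rate_pct": 1.0, "p95_latency_ms": 500.0}


def monitoring_template(endpoint: str) -> Dict[str, object]:
    """Build a standard monitoring definition for a new API endpoint."""
    return {
        "endpoint": endpoint,
        "metrics": ["error_rate", "latency", "request_volume"],
        "alerts": dict(DEFAULT_ALERTS),  # copy so callers can tune per endpoint
    }


@dataclass
class Feature:
    """Operational facts about one feature (hypothetical fields)."""
    name: str
    rollback_steps: List[str] = field(default_factory=list)
    monitoring: Dict[str, object] = field(default_factory=dict)
    drill_passed_in_staging: bool = False


def readiness_gaps(feature: Feature) -> List[str]:
    """List what is still missing before the feature is deployment-ready."""
    gaps = []
    if not feature.rollback_steps:
        gaps.append("no rollback runbook")
    if not feature.monitoring:
        gaps.append("no monitoring configured")
    if not feature.drill_passed_in_staging:
        gaps.append("deployment drill not run in staging")
    return gaps
```

A feature created as `Feature("checkout-v2")` starts with all three gaps. It clears them once it has at least one documented rollback step, a monitoring definition such as `monitoring_template("/api/checkout")`, and a successful staging drill.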

4. Cross-Functional Team DoD

Welcome to the ultimate team-up, the “Avengers, assemble!” moment of your product development cycle. The Cross-Functional Team DoD is a holistic pact where “done” isn’t decided in an engineering silo. Instead, it’s a shared milestone that requires a green light from development, design, QA, security, and even business stakeholders. This Definition of Done example transforms the handoff into a handshake, ensuring every angle is covered before a story is truly finished.

This approach breaks down walls and builds bridges. A feature is only complete when the UX designer confirms it matches the mockups, QA certifies it’s bug-free, and the product owner agrees it delivers the intended business value. It’s about creating a single, unified understanding of quality that spans every discipline involved in bringing an idea to life.

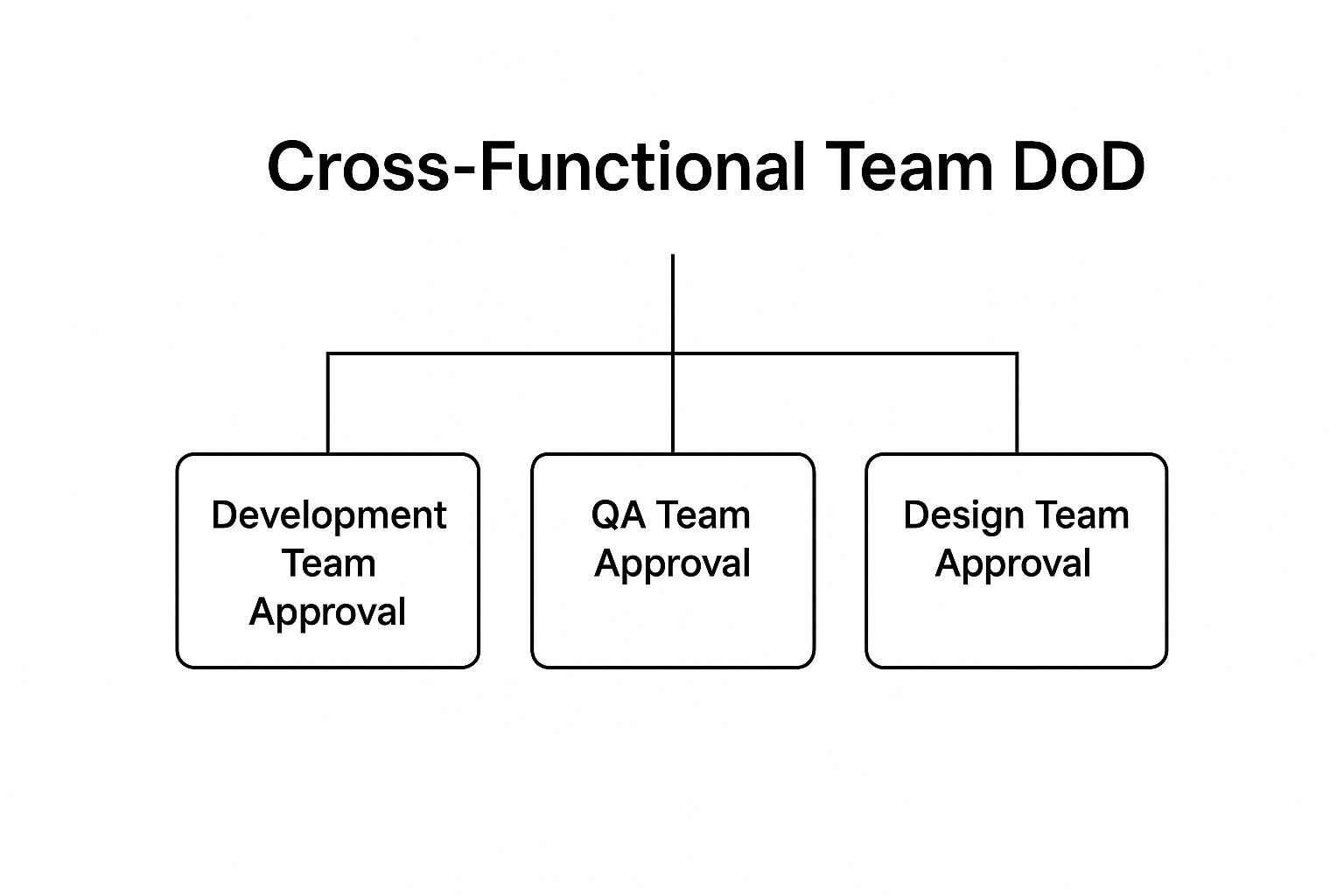

The infographic above shows a simplified hierarchy of the approvals needed for a story to be considered done, highlighting the parallel validation from key team functions.

This visual demonstrates that no single department holds the keys; instead, completion is a consensus reached through multi-disciplinary validation.

Strategic Breakdown: The Why and How

Why orchestrate this complex ballet of approvals? Because it prevents the dreaded “that’s not what I asked for” scenario. By involving everyone from the start, you ensure alignment and catch misunderstandings early, saving immense rework and fostering a powerful sense of collective ownership.

- Who uses it? Enterprise giants like IBM rely on multi-disciplinary gates for their complex software releases. Adobe’s Creative Cloud teams use rigorous cross-functional validation to ensure new features are seamless, while Slack’s entire product development ethos is built on this kind of deep, cross-team collaboration before anything ships.

- Why does it work? It institutionalizes communication. Instead of hoping the designer talks to the developer, this DoD mandates it. It makes quality a shared responsibility, not just QA’s problem. This model, often championed by Scaled Agile Framework (SAFe) practitioners, ensures that business value, user experience, and technical integrity are all given equal weight. Explore these concepts further to improve your team’s synergy with tips on cross-departmental collaboration.

Key Insight: A Cross-Functional DoD forces a holistic view of the product. It shifts the team’s focus from “my part is done” to “our goal is achieved,” which is a game-changer for building truly great products.

Actionable Takeaways for Your Team

Ready to assemble your product Avengers? Here’s how to implement a Cross-Functional DoD without causing chaos.

- Define Clear Roles: Document exactly what each function is responsible for approving. For example, Design approves UI/UX fidelity, QA approves bug-free functionality, and the Product Owner approves business requirement fulfillment.

- Use Collaborative Checklists: Create a shared checklist within your project management tool (like Jira or Trello) for each user story. Each discipline gets its own set of items to check off before the story can move to “Done.”

- Standardize Handoffs: Establish a simple, repeatable process for requesting reviews. This could be an automated notification in Slack or a dedicated status column on your Kanban board to signal that a story is ready for cross-functional review.
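As a rough illustration of the role-based sign-offs described above, the sketch below tracks which functions still need to approve a story. The role keys and function names are hypothetical. In practice this logic would usually live in your project management tool's workflow rules rather than in a standalone script.

```python
from typing import Dict, List, Set

# Each function and what it approves, taken from the example roles above.
REQUIRED_SIGNOFFS: Dict[str, str] = {
    "design": "UI/UX fidelity",
    "qa": "bug-free functionality",
    "product_owner": "business requirement fulfillment",
}


def pending_signoffs(approved: Set[str]) -> List[str]:
    """Return the roles that have not yet approved, in checklist order."""
    return [role for role in REQUIRED_SIGNOFFS if role not in approved]


def can_move_to_done(approved: Set[str]) -> bool:
    """A story moves to Done only when every required role has signed off."""
    return not pending_signoffs(approved)
```

For a story approved only by QA, `pending_signoffs({"qa"})` returns `["design", "product_owner"]`. That list is exactly who an automated Slack notification would need to ping.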

5. Minimum Viable Product (MVP) DoD

Welcome to the MVP DoD, the “just ship it” philosophy refined into a strategic weapon. This is the Definition of Done for the innovators, the disruptors, and anyone who believes that learning from real users is more valuable than perfecting a product in a vacuum. It ruthlessly strips away everything but the essential core functionality needed to test a single, critical hypothesis. It’s not about building less; it’s about learning faster.

This DoD redefines “done” not as feature-complete, but as “ready to learn.” The goal is to get a bare-bones version of your product into the hands of early adopters to validate your core idea. It prioritizes speed to market and feedback loops over a polished, all-encompassing feature set. Think less “finished masterpiece” and more “brilliant first draft.”

Strategic Breakdown: The Why and How

Why embrace such a lean, almost naked, approach to “done”? Because it’s the most effective way to avoid building something nobody wants. This DoD forces you to confront your riskiest assumptions head-on, using real-world data instead of internal speculation to guide your next steps.

- Who uses it? The Silicon Valley startup ecosystem was built on this. Dropbox famously started with a simple video demonstrating file sync because the product itself was too complex to build as an MVP. Twitter launched as a basic status-update service for an internal team, proving the concept before adding the features we know today.

- Why does it work? It shortens the build-measure-learn cycle to its absolute minimum. By defining “done” as “ready for feedback,” teams stop over-engineering and start validating. This approach ensures that every development effort is tied directly to a learning objective, preventing wasted resources and aligning the entire team on a path to product-market fit. This lean mindset is crucial when you start mapping out future development; explore these guides on how to create a product roadmap to see how MVPs fit into the larger picture.

Key Insight: An MVP DoD isn’t an excuse for a sloppy product. It’s a hyper-focused agreement on what “viable” means, ensuring the product is just stable, usable, and functional enough to successfully test the core hypothesis.

Actionable Takeaways for Your Team

Ready to launch and learn? Here’s how to craft an MVP DoD that gets you to market faster and smarter.

- Isolate the Core Hypothesis: What is the single most important assumption you need to test? Your MVP DoD must ensure the product can answer that one question. If a task doesn’t serve that primary goal, it’s not part of this DoD.

- Define “Viable,” Not “Perfect”: Your checklist should focus on the bare minimum for functionality, stability, and security. For example: “User can create an account,” “Core function X works without crashing,” and “Basic user data is secure.” Forget the bells and whistles for now.

- Plan for the Pivot: The whole point of an MVP is to learn. Your DoD should include criteria for collecting feedback, such as “in-app feedback form is live” or “analytics tools are tracking core user actions.” This makes your next move a data-driven decision, not a guess.

6. Regulatory Compliance DoD

Enter the Regulatory Compliance DoD, the one that makes your legal team sleep soundly at night. This is the heavyweight champion of “crossing your t’s and dotting your i’s,” a meticulously crafted gatekeeper for industries where one wrong move could lead to catastrophic fines, lawsuits, or worse. It’s a specialized Definition of Done example that ensures every feature, update, or product launch is fully compliant with all governing laws and industry standards.

This DoD is less about elegant code and more about ironclad evidence. It transforms vague legal requirements into a concrete, auditable trail. Before any user story can be marked as complete, it must pass a rigorous inspection against a fortress of compliance criteria, from data privacy laws like GDPR and HIPAA to financial regulations and aerospace safety standards. This isn’t just about following the rules; it’s about proving you followed them.

Strategic Breakdown: The Why and How

Why create a DoD that feels like it was co-written by a team of lawyers? Because in regulated industries, “done” means “defensible.” This approach systematically de-risks development by embedding compliance directly into the workflow, preventing last-minute scrambles and ensuring that every release is audit-ready from day one.

- Who uses it? This is standard operating procedure for companies where compliance is non-negotiable. Healthcare IT giants like Epic Systems build HIPAA validation into every feature. Financial powerhouses like JPMorgan Chase bake in extensive checks for financial regulations. Aerospace leader Boeing integrates FAA certification requirements directly into its software completion criteria.

- Why does it work? It makes compliance a shared responsibility, not just a problem for the legal department. By codifying requirements into checklists and automating checks where possible, teams can move quickly without sacrificing diligence. This builds a culture of “compliance by design.” For more on navigating this complex landscape, explore these insights on digital regulations and compliance.

Key Insight: A Regulatory Compliance DoD is more than a checklist; it’s a proactive risk management strategy. It turns legal obligations from a bottleneck into a repeatable, integrated part of your development process.

Actionable Takeaways for Your Team

Ready to build your compliance fortress? Here’s how to get started without getting lost in legal jargon.

- Involve the Experts Early: Don’t write your compliance DoD in a vacuum. Bring your legal and compliance officers into the conversation from the very beginning. They are your best resource for translating dense legal text into actionable checklist items for your team.

- Standardize and Template: Create a standardized compliance checklist template that can be attached to every relevant user story. This ensures consistency and makes the process repeatable. Include items like “Data encryption standards met,” “Audit trail generated,” and “Privacy impact assessment reviewed.”

- Automate Compliance Scans: Manual checks are prone to human error. Invest in tools that can automate parts of the compliance verification process, such as scanning for data privacy violations or checking against known security standards. This provides a faster, more reliable feedback loop.

- Treat It as a Living Document: Regulations change. Your DoD must evolve with them. Schedule regular reviews (e.g., quarterly) with your compliance experts to update your checklists and ensure they reflect the latest legal requirements.
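A compliance checklist template of the kind described above might look like the following Python sketch. The class and function names are hypothetical, and the 90-day window is only an assumed stand-in for "quarterly". The actual requirements and review cadence should come from your legal and compliance officers.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class ComplianceItem:
    """One checklist line plus the evidence that proves it was done."""
    requirement: str
    evidence: Optional[str] = None  # e.g. a link or document ID for auditors


def new_checklist() -> List[ComplianceItem]:
    """Standard template to attach to every relevant user story."""
    return [
        ComplianceItem("Data encryption standards met"),
        ComplianceItem("Audit trail generated"),
        ComplianceItem("Privacy impact assessment reviewed"),
    ]


def missing_evidence(checklist: List[ComplianceItem]) -> List[str]:
    """Requirements that cannot yet be proven to an auditor."""
    return [item.requirement for item in checklist if not item.evidence]


def review_overdue(last_review: date, today: date, max_age_days: int = 90) -> bool:
    """True when the checklist itself is due for a review with compliance experts."""
    return (today - last_review).days > max_age_days
```

Tying each item to a piece of evidence reflects the point that compliance is about proving you followed the rules, not just following them. A story with any entry in `missing_evidence` is not yet audit-ready, however clean the code is.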

Definition of Done: 6 Key Examples Compared

| DoD Type | Implementation Complexity | Resource Requirements | Expected Outcomes | Ideal Use Cases | Key Advantages |
|---|---|---|---|---|---|
| Technical Quality Checklist | High – requires tooling and setup | High – automation tools, peer reviews | High code quality, reduced technical debt | Teams focused on maintainability and reliability | Ensures technical standards and performance |
| User Acceptance Focused | Medium – user testing and demos | Medium – stakeholder coordination | High user satisfaction and acceptance | Features needing strong user validation | Aligns product with business and user needs |
| Deployment-Ready | High – deployment and monitoring | High – ops, infra, rollback testing | Stable, reliable production deployments | Critical production environments | Minimizes deployment risks and downtime |
| Cross-Functional Team | Very High – multi-discipline input | Very High – time from various teams | Comprehensive quality across disciplines | Large, complex product development | Improves collaboration and accountability |
| Minimum Viable Product (MVP) | Low – minimal feature set | Low – minimal resources | Fast market validation and feedback | Startups, innovation projects | Enables rapid delivery and iterative learning |
| Regulatory Compliance | Very High – strict documentation | Very High – compliance expertise | Legal compliance and audit readiness | Regulated industries (healthcare, finance) | Avoids legal risks, ensures standards adherence |

Crafting Your Perfect DoD: From Examples to Execution

So, you’ve journeyed through the wild and wonderful world of Definition of Done examples. We’ve dissected everything from the nuts-and-bolts Technical Quality Checklist to the laser-focused Minimum Viable Product (MVP) DoD. You’ve seen how different teams, whether they’re obsessed with regulatory compliance or focused on rapid deployment, tailor their criteria to conquer their unique challenges.

The big secret? There is no single “best” Definition of Done. The perfect DoD is not a relic you download from the internet and chisel into stone. It’s a living, breathing covenant your team makes with itself and its stakeholders. The Definition of Done examples we’ve explored are not prescriptive blueprints; they are a springboard for building your own.

The Real Takeaway: Your DoD is a Conversation, Not a Commandment

If you walk away with one thing, let it be this: a Definition of Done is a tool for empowerment, not a bureaucratic checklist designed to slow you down. Its primary purpose is to spark conversation, create alignment, and build a shared understanding of what “quality” and “complete” truly mean for your team.

Think of it as the ultimate team-building exercise. It forces you to answer the tough questions:

- What does quality look like for us? Is it bug-free code, pixel-perfect design, or flawlessly compliant documentation?

- What risks are we trying to mitigate? Are we worried about technical debt, poor user experience, or security vulnerabilities?

- How do we guarantee value? What is the absolute minimum standard a piece of work must meet to be considered a valuable increment for our customers?

The most effective teams don’t just set their DoD and forget it. They treat it like a strategic asset. They revisit it in every sprint retrospective, asking, “Did our DoD serve us well? Did anything slip through the cracks? How can we make it better?” This continuous refinement is where the magic happens, turning a simple checklist into a powerful engine for continuous improvement and a culture of collective ownership.

Your Action Plan: Stop Admiring, Start Building

It’s time to move from theory to practice. Don’t let the sheer variety of Definition of Done examples paralyze you. The goal is progress, not perfection.

Here are your next steps:

- Gather Your Crew: Schedule a meeting with your entire team. Yes, everyone. Devs, QA, designers, product managers, even stakeholders who are frequently involved.

- Pick a Starting Point: Which of the examples in this article resonated most? The Cross-Functional Team DoD? The User Acceptance Focused DoD? Use it as a conversation starter, not a final script.

- Ask the Magic Question: “What is one thing we could add to our DoD right now that would have prevented the biggest headache from our last sprint?”

- Write It Down and Make It Visible: Your DoD is useless if it’s buried in a forgotten Confluence page. Put it on a physical whiteboard, pin it to the top of your Slack channel, or build it directly into your project management tools. A DoD that isn’t seen is a DoD that doesn’t exist.

By transforming your DoD from a static document into a dynamic agreement, you’re not just shipping better products. You are building a more aligned, confident, and empowered team, ready to tackle any challenge that comes its way.
