We recently surveyed over two hundred users on the role of automatic translations in support conversations (ATSC). Here’s the full report.

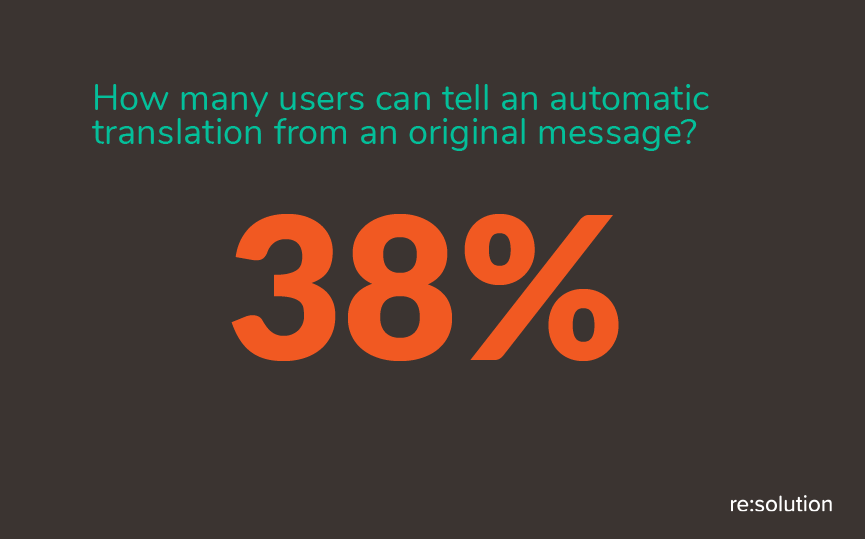

As part of this project, we wanted to know whether people can actually tell when they’re reading an automated translation.

So we put Google Translate through a Turing Test.

If you’re not familiar with the term, a Turing Test is a way of determining whether an artificial intelligence can behave so much like a person that it is mistaken for one.

Watch this TEDx talk if you want to know more:

In a typical Turing Test, a machine converses with a person. If the person can’t tell they are talking to a machine, the machine passes.

We had to adapt the format. In our version, real people are still talking to each other, but the conversation happens in two different languages and a machine translates it.

Here’s how the experiment went.

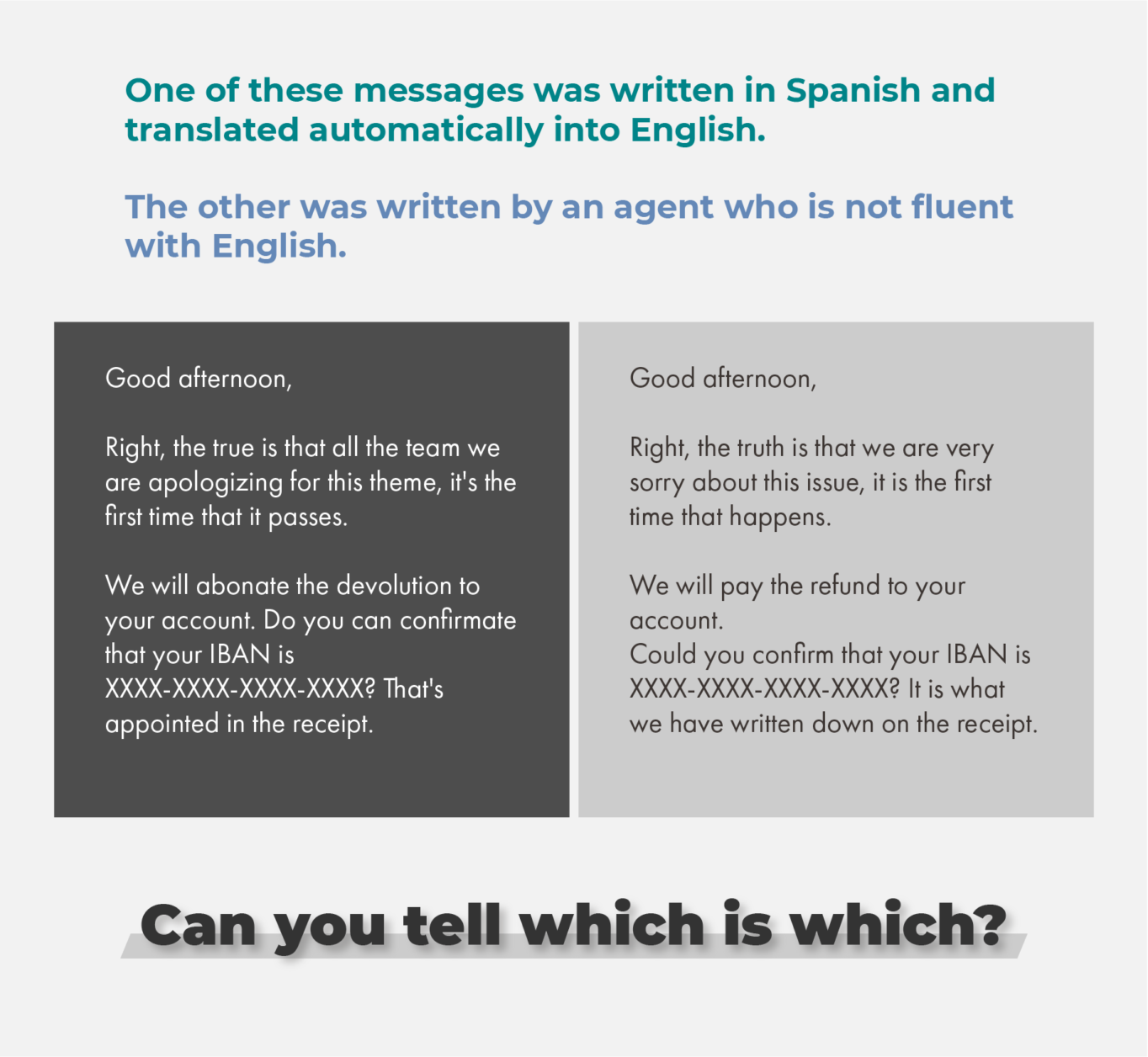

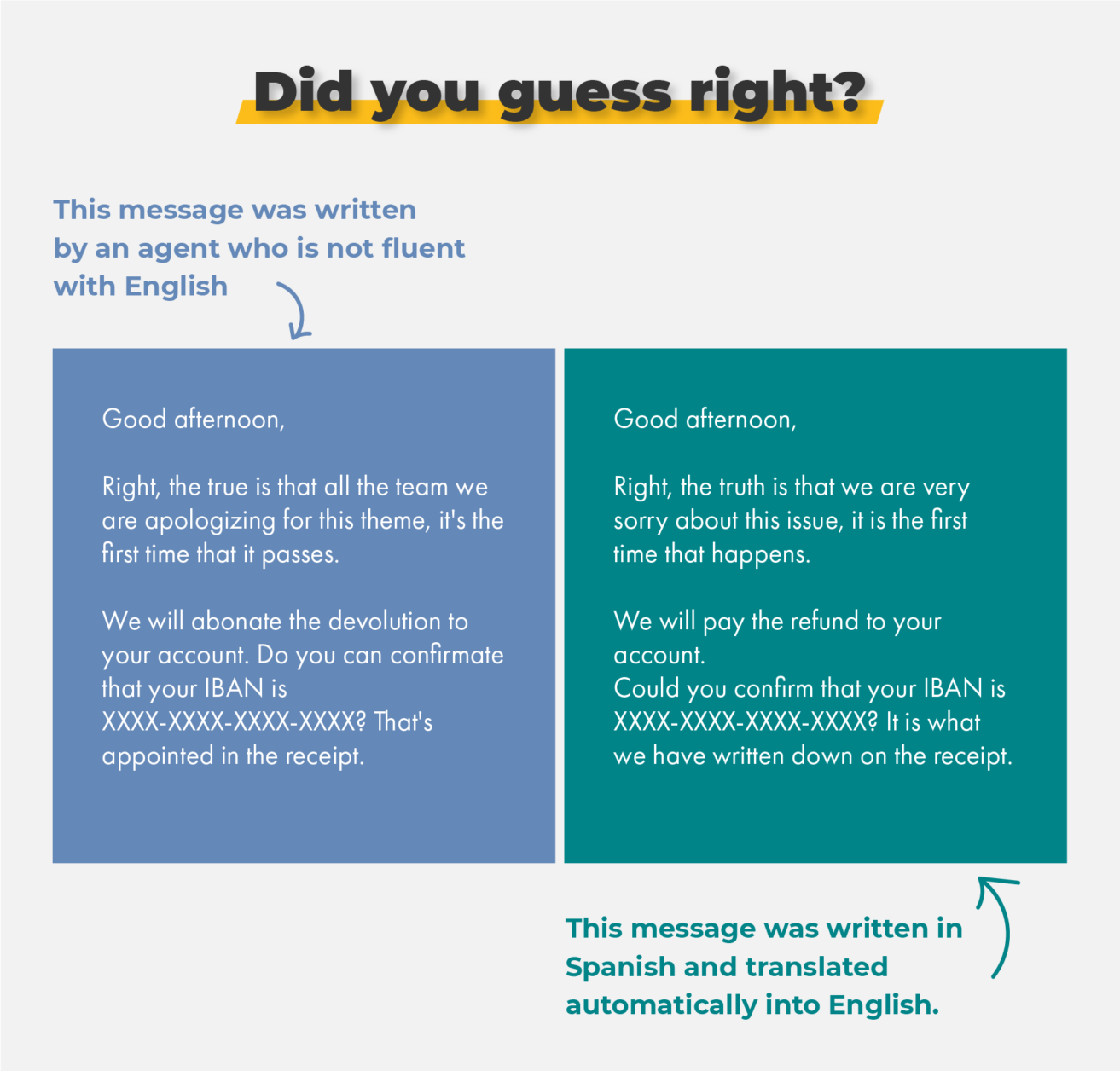

✉️ We showed users these two messages

- One of them had been written in Spanish, then translated into English with Google Translate.

- The other had been written directly in English by an agent with lower-intermediate English.

🕵🏽 Users had to tell which was which

Most users couldn’t tell the messages apart: only 38% guessed correctly.

In other words, Google Translate passed our Turing Test!

🎯 They saw the results

Afterwards, we showed users which message had been automatically translated.

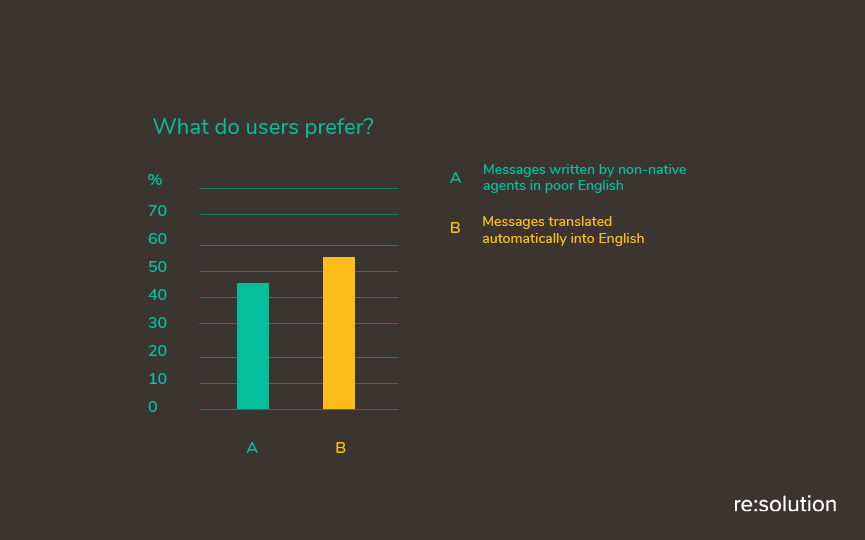

😋 We asked which one they preferred

Finally, we asked users which message they would like to receive.

Most of them said the automatic translation was better!

🤖 Don’t be biased against translation robots!

Next time someone at your company doubts that machine translation should be used, tell them about our little experiment and dare them to prove us wrong. Have you tested automated translations? What went well, and what went wrong?

If you want to automatically support all of your customers in their own language, you can try Language Translation for Jira Service Management.